The difference between an automation tool that waits for you and an AI Employee that works for you.

We were told that if we deployed bots, scripts, and "digital workers," the repetitive tasks would vanish. So why haven't they?

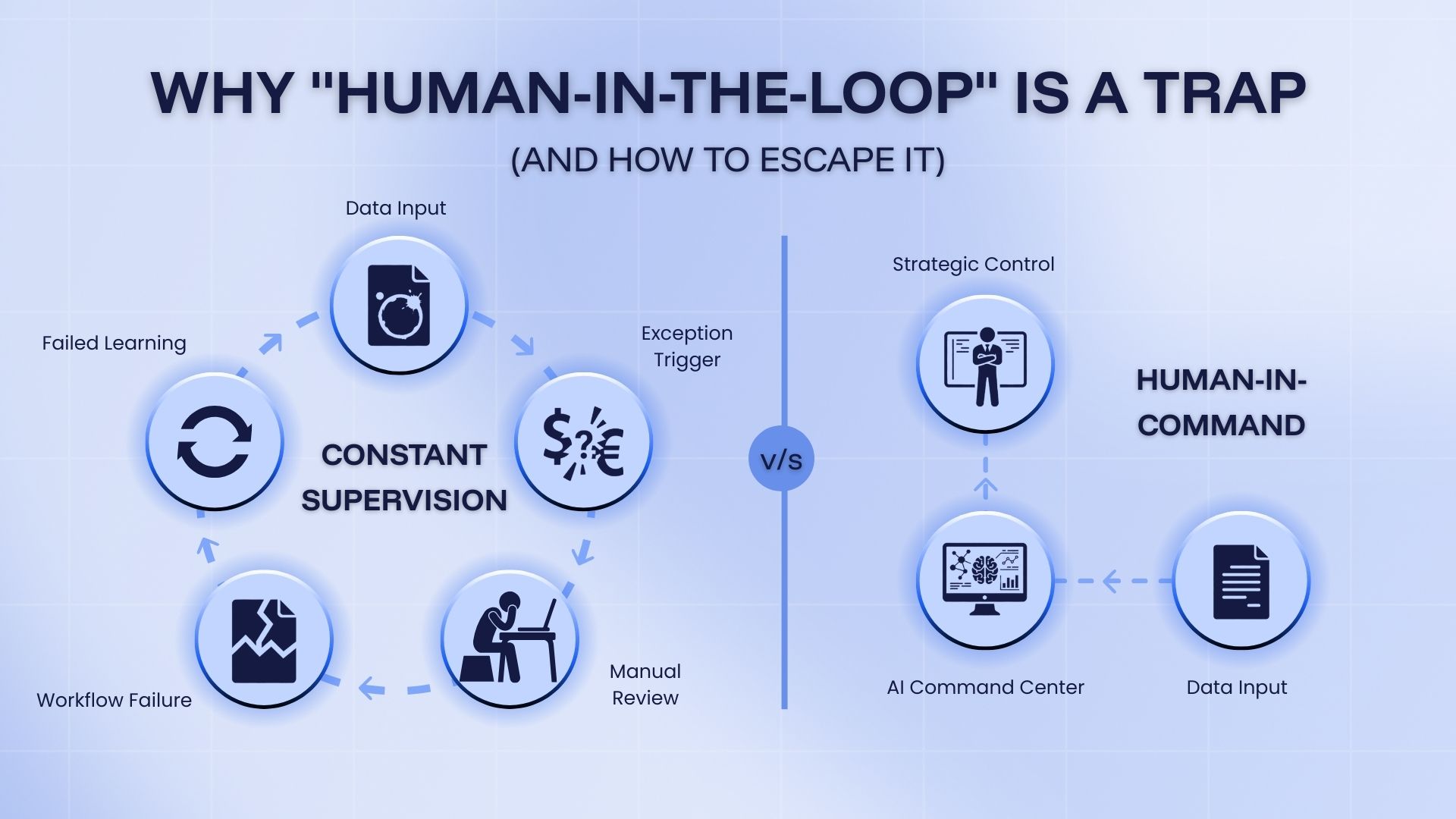

The answer lies in a single, flawed architectural concept that the industry has treated as the gold standard for a decade: "Human-in-the-Loop" (HITL).

It sounds safe. It sounds responsible. But for the enterprise trying to scale, HITL is a trap.

Imagine you hired a human intern. On their first day, they ask you how to process an invoice. You show them.

Ten minutes later, another invoice comes in. They ask you again.

The next day, the same vendor sends an invoice. They ask you again.

This isn't an employee; this is a liability. You aren't "managing" them; you are doing the work through them.

Traditional automation behaves the same way. When a bot hits an edge case such as a coffee stain on a PDF, a new vendor layout, or a currency mismatch, it stops. It throws the work back to you (the Loop). You fix it. The bot continues.

This feels like control, but it is micromanagement. And the worst part? The system has no memory. You fix the exception today, and the bot will make the exact same error tomorrow. You are trapped in a cycle of "Forever Supervision."
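To make "Forever Supervision" concrete, here is a toy Python sketch of a scripted bot in a Human-in-the-Loop setup. The `Invoice` record, the `bot_process` and `run_hitl` functions, and the USD-only rule are hypothetical illustrations, not any real product's API. Because the human's fix is never stored, every repeat of the same exception costs another interruption.

```python
from dataclasses import dataclass


# Hypothetical invoice record, for illustration only.
@dataclass
class Invoice:
    vendor: str
    currency: str
    amount: float


def bot_process(invoice: Invoice, expected_currency: str = "USD") -> str:
    """A scripted bot: anything its rule does not cover is thrown back to a human."""
    if invoice.currency != expected_currency:
        return "needs_human"
    return "posted"


def run_hitl(invoices: list[Invoice]) -> int:
    """Count how often a human is pulled into the loop.

    The human resolves each escalated invoice by hand, but nothing is
    recorded, so an identical exception escalates again next time.
    """
    interruptions = 0
    for invoice in invoices:
        if bot_process(invoice) == "needs_human":
            interruptions += 1  # human fixes this one case; the fix is forgotten
    return interruptions


# The same vendor sends the same kind of invoice three days in a row.
batch = [Invoice("Acme GmbH", "EUR", 1200.0) for _ in range(3)]
print(run_hitl(batch))  # 3
```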

Enterprises don't want to be in the loop. They want to be In Command.

At Supervity AI, we are pioneering the shift to the Human-in-Command (HiC) architecture. This is a fundamental reversal of the operating model.

In a Human-in-Command model, the human is not a fail-safe mechanic. The human is the Commander.

Here is the difference:

1. You Set Policy, Not Scripts

Instead of coding rigid "if/then" rules, you define Natural Language Policies in the AI Command Center.
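The article does not say how the AI Command Center stores or evaluates Natural Language Policies. As a minimal sketch, assuming each policy pairs the wording a human writes with a machine-checkable guardrail, it might look like the following. The `Policy` class, the invoice field names, and the 20% price threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    text: str                          # what the human writes in plain language
    guardrail: Callable[[dict], bool]  # hypothetical compiled check; True = compliant


# Hypothetical example policies; the threshold is illustrative only.
POLICIES = [
    Policy(
        "Pay invoices only in the vendor's contracted currency.",
        lambda inv: inv["currency"] == inv["contract_currency"],
    ),
    Policy(
        "Escalate any unit price more than 20% above the contracted price.",
        lambda inv: inv["unit_price"] <= inv["contract_price"] * 1.20,
    ),
]


def violations(invoice: dict, policies: list[Policy] = POLICIES) -> list[str]:
    """Return the wording of every policy the invoice breaks."""
    return [p.text for p in policies if not p.guardrail(invoice)]


invoice = {
    "currency": "EUR",
    "contract_currency": "EUR",
    "unit_price": 150.0,
    "contract_price": 100.0,
}
print(violations(invoice))
```

Keeping the original wording next to each check means an escalation can cite the exact policy it tripped, in the commander's own language.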

2. The AI Employee Executes Autonomously

Your Operator Agents (the hands) and Orchestrator Agents (the managers) execute the work within your guardrails. As long as they are compliant with your policy, they do not disturb you. Traffic flows.
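A minimal sketch of that "execute unless out of policy" loop follows, assuming a single hypothetical guardrail (unit price no more than 20% above the contracted price). For brevity it collapses the Operator and Orchestrator roles into one function; `execute`, `within_policy`, and `Outcome` are illustrative names, not Supervity's API.

```python
from dataclasses import dataclass, field

# Hypothetical guardrail: a unit price more than 20% above contract is a "true exception".
MAX_PRICE_INCREASE = 0.20


@dataclass
class Outcome:
    processed: list[str] = field(default_factory=list)  # completed with no human involved
    escalated: list[str] = field(default_factory=list)  # surfaced to the command center


def within_policy(item: dict) -> bool:
    return item["unit_price"] <= item["contract_price"] * (1 + MAX_PRICE_INCREASE)


def execute(queue: list[dict]) -> Outcome:
    """Process every compliant item autonomously; escalate only policy breaches."""
    outcome = Outcome()
    for item in queue:
        if within_policy(item):
            outcome.processed.append(item["id"])
        else:
            outcome.escalated.append(item["id"])
    return outcome


queue = [
    {"id": "INV-1", "unit_price": 101.0, "contract_price": 100.0},
    {"id": "INV-2", "unit_price": 99.0, "contract_price": 100.0},
    {"id": "INV-3", "unit_price": 180.0, "contract_price": 100.0},
]
result = execute(queue)
print(result.processed, result.escalated)  # ['INV-1', 'INV-2'] ['INV-3']
```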

3. The Memory Fabric Learns

This is the breakthrough. When the AI does encounter a true exception (e.g., a massive price spike), it escalates it to the AI Command Center.

You review it. You make a decision.

The system remembers.

That decision is written into the Memory Fabric. The AI learns from your governance. You teach it once, and it remembers forever.
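One way to picture the Memory Fabric is as a store of human decisions keyed by an exception signature. The sketch below assumes a signature of (vendor, exception type) and a hypothetical `ask_human` callback standing in for the AI Command Center; it illustrates the escalate-once idea and is not how Supervity implements it.

```python
from collections import Counter
from typing import Callable

Signature = tuple[str, str]  # hypothetical key: (vendor, exception type)


class DecisionMemory:
    """Stores each human decision so the same exception is escalated only once."""

    def __init__(self) -> None:
        self._decisions: dict[Signature, str] = {}

    def resolve(self, signature: Signature, ask_human: Callable[[Signature], str]) -> str:
        if signature in self._decisions:
            return self._decisions[signature]  # remembered: no escalation
        decision = ask_human(signature)        # first occurrence: escalate
        self._decisions[signature] = decision  # write the decision to memory
        return decision


escalations: Counter = Counter()


def ask_human(signature: Signature) -> str:
    """Stand-in for the commander reviewing the case in the command center."""
    escalations[signature] += 1
    return "approve"


memory = DecisionMemory()
for _ in range(3):
    memory.resolve(("Acme GmbH", "price_spike"), ask_human)

print(escalations[("Acme GmbH", "price_spike")])  # 1
```

The choice of signature decides how far a decision generalizes: a broader key means fewer escalations, but also more risk of applying a past decision to a case it does not fit.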

The era of brittle scripts and helpless bots is over. We are entering the era of the AI Workforce.

If you are tired of fixing broken bots and ready to start building a team that learns, you need to see this architecture in action.

On February 5th, we are hosting a deep-dive session on "Onboarding AI Employees: How Enterprises Are Building Their Next-Gen Digital Workforce."

We will show you the blueprint for moving from "Loop" to "Command," setting natural language policies, and finally scaling your digital operations.

Register for the event here:

https://www.linkedin.com/events/onboardingaiemployees-howenterp7406657590581055488/